Introduction

The OCI GenAI service offers access to pre-trained models, as well as allowing you to host your own custom models.

The following OOTB models are available to you -

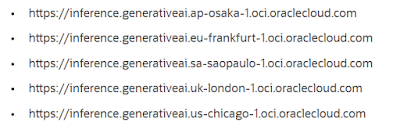

OCI GenAI service is currently available in 5 regions -

I will be using the Chicago endpoint in this post.

The objective of the post is to show how easy it is to invoke the chat interface from an integration. I will detail this for both Cohere and Llama.

Pre-Requisites

First step - generate private and public keys -

openssl genrsa -out oci_api_key.pem 2048

and

openssl rsa -pubout -in oci_api_key.pem -out oci_api_key_public.pem

I then log in to the OCI Console and create a new API key for my user -

User Settings - API Keys -

I then choose and upload the public key I just created -

I copy the fingerprint for later use.
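
If you want to double-check the fingerprint locally, here is a minimal Python sketch. It assumes the fingerprint is the MD5 digest of the DER-encoded public key, written as colon-separated hex pairs, which is how I understand the OCI documentation. The function name `pem_public_key_fingerprint` is my own, not part of any Oracle tooling.

```python
import base64
import hashlib


def pem_public_key_fingerprint(pem_text: str) -> str:
    """Return a colon-separated MD5 fingerprint for a PEM public key.

    Assumption: the console fingerprint is MD5 over the DER bytes of the key.
    """
    # Keep only the base64 body; drop the BEGIN/END marker lines and blanks.
    lines = [line.strip() for line in pem_text.splitlines()]
    body = "".join(line for line in lines if line and not line.startswith("-----"))
    der = base64.b64decode(body)  # PEM body is base64 of the DER encoding
    digest = hashlib.md5(der).hexdigest()
    # Group the hex digest into byte pairs: "ab:cd:ef:..."
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))


if __name__ == "__main__":
    # Placeholder PEM body for illustration only; pass the contents of
    # oci_api_key_public.pem to check a real key.
    sample = "-----BEGIN PUBLIC KEY-----\naGVsbG8=\n-----END PUBLIC KEY-----\n"
    print(pem_public_key_fingerprint(sample))
```

The value printed for your real public key should match the fingerprint shown in the console, if the assumption above holds.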

I also created the following Policy -

allow group myAIGroup to manage generative-ai-family in tenancy

Invoking GenAI chat interface from OIC

Now to OIC where I create a new project - adding 2 connections -

The OCI GenAI connection is configured as follows - note the base URL -

https://inference.generativeai.us-chicago-1.oci.oraclecloud.com

As already mentioned, OCI GenAI is currently available in 5 regions. Check out the documentation for the endpoint for other regions.
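
The Chicago base URL above embeds the region identifier `us-chicago-1`. As a small sketch, and assuming other regions follow the same host pattern (do confirm this against the documentation), the base URL could be derived like this. The helper name is hypothetical.

```python
def genai_inference_base_url(region: str) -> str:
    """Build the inference base URL for a region identifier.

    Assumption: every region uses the same host pattern as the Chicago
    endpoint quoted in the post.
    """
    region = region.strip().lower()
    if not region:
        raise ValueError("region must not be empty")
    return f"https://inference.generativeai.{region}.oci.oraclecloud.com"


if __name__ == "__main__":
    print(genai_inference_base_url("us-chicago-1"))
```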

This integration will leverage the Cohere LLM - The invoke is configured as follows -

The Request payload is as follows -

    {
      "compartmentId" : "ocid1.compartment.yourOCID",
      "servingMode" : {
        "modelId" : "cohere.command-r-08-2024",
        "servingType" : "ON_DEMAND"
      },
      "chatRequest" : {
        "message" : "Summarize: Hi there my name is Niall and I am from Dublin",
        "maxTokens" : 600,
        "apiFormat" : "COHERE",
        "frequencyPenalty" : 1.0,
        "presencePenalty" : 0,
        "temperature" : 0.2,
        "topP" : 0,
        "topK" : 1
      }
    }
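
For anyone who wants to try the same payload outside OIC, here is a minimal Python sketch that assembles the Cohere-format request body shown above. The function name and its defaults are illustrative. The field names and values are copied from the payload in this post, and the compartment OCID is a placeholder.

```python
import json


def build_cohere_chat_request(compartment_id: str, message: str,
                              model_id: str = "cohere.command-r-08-2024",
                              max_tokens: int = 600,
                              temperature: float = 0.2) -> dict:
    """Assemble a Cohere-format chat request body as a plain dict."""
    return {
        "compartmentId": compartment_id,
        "servingMode": {"modelId": model_id, "servingType": "ON_DEMAND"},
        "chatRequest": {
            "apiFormat": "COHERE",
            "message": message,  # Cohere format takes a single message string
            "maxTokens": max_tokens,
            "frequencyPenalty": 1.0,
            "presencePenalty": 0,
            "temperature": temperature,
            "topP": 0,
            "topK": 1,
        },
    }


if __name__ == "__main__":
    request = build_cohere_chat_request(
        "ocid1.compartment.yourOCID",
        "Summarize: Hi there my name is Niall and I am from Dublin",
    )
    print(json.dumps(request, indent=2))
```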

The Response payload -

    {
      "modelId" : "cohere.command-r-08-2024",
      "modelVersion" : "1.7",
      "chatResponse" : {
        "apiFormat" : "COHERE",
        "text" : "Niall, a resident of Dublin, introduces themselves.",
        "chatHistory" : [ {
          "role" : "USER",
          "message" : "Summarize: Hi there my name is Niall and I am from Dublin"
        }, {
          "role" : "CHATBOT",
          "message" : "Niall, a resident of Dublin, introduces themselves."
        } ],
        "finishReason" : "COMPLETE"
      }
    }
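
In the Cohere-format response, the answer sits in `chatResponse.text`. A minimal sketch of pulling it out in Python, assuming the response has the shape shown above (the helper name is my own):

```python
def extract_cohere_text(response: dict) -> str:
    """Return the generated text from a Cohere-format chat response."""
    chat_response = response.get("chatResponse", {})
    if chat_response.get("apiFormat") != "COHERE":
        raise ValueError("expected a COHERE-format chat response")
    return chat_response["text"]


if __name__ == "__main__":
    sample = {
        "modelId": "cohere.command-r-08-2024",
        "chatResponse": {
            "apiFormat": "COHERE",
            "text": "Niall, a resident of Dublin, introduces themselves.",
            "finishReason": "COMPLETE",
        },
    }
    print(extract_cohere_text(sample))
```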

I test the integration -

Now to an integration that leverages Llama -

The invoke is configured with the following request / response -

Request -

    {
      "compartmentId" : "ocid1.compartment.yourOCID",
      "servingMode" : {
        "modelId" : "meta.llama-3-70b-instruct",
        "servingType" : "ON_DEMAND"
      },
      "chatRequest" : {
        "messages" : [ {
          "role" : "USER",
          "content" : [ {
            "type" : "TEXT",
            "text" : "who are you"
          } ]
        } ],
        "apiFormat" : "GENERIC",
        "maxTokens" : 600,
        "isStream" : false,
        "numGenerations" : 1,
        "frequencyPenalty" : 0,
        "presencePenalty" : 0,
        "temperature" : 1,
        "topP" : 1.0,
        "topK" : 1
      }
    }
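
The main difference from the Cohere payload is that the GENERIC format takes a list of messages, each with a role and a list of typed content parts. A minimal Python sketch that builds the same request body follows. The function name and defaults are illustrative, and the field values are the ones from the payload above.

```python
import json


def build_generic_chat_request(compartment_id: str, user_text: str,
                               model_id: str = "meta.llama-3-70b-instruct",
                               max_tokens: int = 600) -> dict:
    """Assemble a GENERIC-format chat request with a single user message."""
    return {
        "compartmentId": compartment_id,
        "servingMode": {"modelId": model_id, "servingType": "ON_DEMAND"},
        "chatRequest": {
            "apiFormat": "GENERIC",
            # One USER message holding one TEXT content part.
            "messages": [
                {"role": "USER", "content": [{"type": "TEXT", "text": user_text}]}
            ],
            "maxTokens": max_tokens,
            "isStream": False,
            "numGenerations": 1,
            "frequencyPenalty": 0,
            "presencePenalty": 0,
            "temperature": 1,
            "topP": 1.0,
            "topK": 1,
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_generic_chat_request("ocid1.compartment.yourOCID", "who are you"), indent=2))
```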

Response -

    {
      "modelId" : "meta.llama-3.3-70b-instruct",
      "modelVersion" : "1.0.0",
      "chatResponse" : {
        "apiFormat" : "GENERIC",
        "timeCreated" : "2025-04-09T09:39:40.145Z",
        "choices" : [ {
          "index" : 0,
          "message" : {
            "role" : "ASSISTANT",
            "content" : [ {
              "type" : "TEXT",
              "text" : "Niall from Dublin introduced himself."
            } ],
            "toolCalls" : [ "1" ]
          },
          "finishReason" : "stop",
          "logprobs" : {
            "1" : "1"
          }
        } ],
        "usage" : {
          "completionTokens" : 9,
          "promptTokens" : 52,
          "totalTokens" : 61
        }
      }
    }
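
In the GENERIC response the answer is nested more deeply: `chatResponse.choices[0].message.content[]`, with one entry per content part. A minimal sketch of extracting the text, assuming the shape shown above and taking only the first choice (the helper name is hypothetical):

```python
def extract_generic_text(response: dict) -> str:
    """Join the TEXT parts of the first choice in a GENERIC-format response."""
    choices = response["chatResponse"]["choices"]
    if not choices:
        raise ValueError("response contains no choices")
    parts = choices[0]["message"]["content"]
    # Ignore any non-text parts and concatenate the rest in order.
    return "".join(part["text"] for part in parts if part.get("type") == "TEXT")


if __name__ == "__main__":
    sample = {
        "chatResponse": {
            "apiFormat": "GENERIC",
            "choices": [{
                "index": 0,
                "message": {
                    "role": "ASSISTANT",
                    "content": [{"type": "TEXT", "text": "Niall from Dublin introduced himself."}],
                },
                "finishReason": "stop",
            }],
        }
    }
    print(extract_generic_text(sample))
```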

I test this integration -

Maybe it's just me, but the Llama response is better.

Now let's make this a bit more interesting - I will pass some context to the LLM -

Here is my input -

    {
      "text": "Here is some additional context Expenses Rules no alcohol can be expensed. bill limit per meal is €100. you cannot expense extreme left wing literature. Please answer my question: can I expense the book the Anarchist's cookbook?"
    }

Here is the test result -

Ok, so let's try with a steak and a beer for €130.

Request -

    {
      "text": "Here is some additional context Expenses Rules no alcohol can be expensed. bill limit per meal is €100. you cannot expense extreme left wing literature. Please answer my question: can I expense a meal of a steak and a beer for €130?"
    }

Response -

{

"summary" : "No, you cannot expense a meal of steak and a beer for €130 as it violates two of the Expenses Rules: the bill limit per meal is €100, and no alcohol can be expensed."

}
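
The "context" here is nothing more than text placed in front of the question. A minimal Python sketch of that prompt assembly, using the same wording as the inputs above (the function name is my own, and the `text` wrapper mirrors the integration's input payload):

```python
def build_prompt_with_context(rules: str, question: str) -> dict:
    """Prefix the question with the rules, in the wording used in this post."""
    prompt = (
        f"Here is some additional context {rules.strip()} "
        f"Please answer my question: {question.strip()}"
    )
    return {"text": prompt}


if __name__ == "__main__":
    rules = (
        "Expenses Rules no alcohol can be expensed. "
        "bill limit per meal is €100. "
        "you cannot expense extreme left wing literature."
    )
    print(build_prompt_with_context(rules, "can I expense a meal of a steak and a beer for €130?"))
```

Keeping the rules and the question as separate arguments makes it easy to swap the rules for text loaded from elsewhere.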

Finally, let's externalise the expense rules to a file and read that file at runtime -

The integration flow is as follows - read the expense docs and pass them to the LLM along with the expense request.

The variable v_expensesRules is set as follows -

oraext:decodeBase64(oraext:encodeReferenceToBase64($GetFileRef/ns18:GetFileReferenceResponse/ns18:File.definitions.getFileReferenceResponse/ns18:fileReference))
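
My reading of this expression is: take the file reference, base64-encode the file's content, then decode that back into a plain string. The Python sketch below mimics that round trip for illustration only. It does not call any OIC function, the helper name is hypothetical, and it assumes the rules file is UTF-8 text.

```python
import base64
from pathlib import Path


def read_rules_via_base64(path: str) -> str:
    """Mimic encodeReferenceToBase64 followed by decodeBase64 on a local file."""
    # Stand-in for encodeReferenceToBase64: file content -> base64 bytes.
    encoded = base64.b64encode(Path(path).read_bytes())
    # Stand-in for decodeBase64: base64 -> original text (UTF-8 assumed).
    return base64.b64decode(encoded).decode("utf-8")
```

The net effect is simply "give me the file content as a string", which is what v_expensesRules ends up holding.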

Note, I also externalised the chat api field values to integration properties -

I run the integration and get the following response -

    {
      "status" : "Based on the provided context, it seems that the company has a strict policy regarding expense claims and the types of purchases that can be reimbursed. \n\nGiven that the company explicitly states that \"no extreme left-wing literature can be expensed,\" it is highly unlikely that the book \"The Anarchist's Cookbook\" would be an allowable expense. This book is often associated with anarchist and radical left-wing ideologies, and as such, it would fall under the category of extreme left-wing literature that is explicitly prohibited from being expensed. \n\nTherefore, it is safe to assume that purchasing \"The Anarchist's Cookbook\" would not be a valid expense claim according to the company's rules."
    }

I make this a bit more complex - I add an extra rule to the expense rules -

7. You cannot expense a meal with meat on a Friday - fish only!

I re-run the integration and get the following response -

    {
      "status" : "Based on the provided expense rules, you would not be able to expense a meal of steak and chips for €80 on April 4th, 2025. \n\nRule number 1 states that no meals over a value of €100 can be expensed. While this meal is under that value, rule number 7 prohibits the expensing of any meal with meat on a Friday. As such, this meal would not be an allowable expense."
    }
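
Note that the model had to work out for itself that April 4th, 2025 is a Friday. If you want to sanity-check an answer like this, the two rules involved are easy to verify deterministically. Here is a minimal sketch, covering only the meal limit and the Friday rule, with names of my own choosing:

```python
from datetime import date


def check_meal(amount_eur: float, contains_meat: bool, meal_date: date,
               limit_eur: float = 100.0) -> list:
    """Return the list of violated rules for a meal (empty list means OK)."""
    violations = []
    if amount_eur > limit_eur:
        violations.append("over meal limit")
    # date.weekday(): Monday is 0, so Friday is 4.
    if contains_meat and meal_date.weekday() == 4:
        violations.append("meat on a Friday")
    return violations


if __name__ == "__main__":
    # Steak and chips for €80 on April 4th, 2025.
    print(check_meal(80, True, date(2025, 4, 4)))
```

This agrees with the LLM's answer: the meal is under the limit but breaks rule 7.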

Summa Summarum

Leveraging the OCI GenAI chat interface from OIC is simple. My examples are, of course, very simplistic, but I'm sure you can extrapolate from them.

Also, for those interested in the story behind The Anarchist Cookbook, check out this entry on Wikipedia. Written by a disaffected teenager at the time of the Vietnam War, it contained instructions for making lots of illegal stuff. Interestingly, the author found religion and tried to get the book banned. He eventually ended up as a (peaceful) conflict resolution expert, renouncing violence of any sort. For the history buffs amongst you, this is akin to Mikhail Bakunin morphing into Peter Kropotkin. Kropotkin's most famous work was the book "Mutual Aid", which pitted co-operation, motivated by universal love, against cold social Darwinism.