Sunday, September 29, 2019

Sunday, September 22, 2019

#730 OIC - Google Tasks adapter

Here is a simple example of leveraging the adapter -

Naturally, I begin by creating the connection.

There are some prerequisites for using the Google Tasks API from OIC.

You need to create the OAuth credentials.

This you do via console.developers.google.com

I enable the Tasks API by clicking Enable -

Now I click Create Credentials and generate the OAuth client ID (Client ID / Client Secret) -

I will also need to specify the scope, e.g. read-only or read/write.

I add my Client ID and Client Secret to the OIC connection and then click Provide Consent -

I click Allow -
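Behind Provide Consent and Allow sits a standard OAuth 2.0 authorization-code flow, and OIC builds the consent request itself. Purely to show what the scope choice amounts to, here is a minimal Python sketch of the consent URL such a flow opens, assuming Google's v2 authorization endpoint and the two Google Tasks scopes. The client ID and redirect URI are placeholders, not values from this setup.

```python
from urllib.parse import urlencode

# Google's OAuth 2.0 authorization endpoint (the consent screen)
AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"

# Google Tasks scopes: read/write and read-only
TASKS_SCOPES = {
    "read_write": "https://www.googleapis.com/auth/tasks",
    "read_only": "https://www.googleapis.com/auth/tasks.readonly",
}


def build_consent_url(client_id: str, redirect_uri: str, access: str = "read_write") -> str:
    """Return the URL that asks the user to grant the chosen Tasks scope."""
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",   # authorization-code flow
        "scope": TASKS_SCOPES[access],
        "access_type": "offline",  # also ask for a refresh token
        "prompt": "consent",       # always show the consent screen
    }
    return f"{AUTH_ENDPOINT}?{urlencode(params)}"


# Placeholder values only; substitute your own client ID and redirect URI
url = build_consent_url("my-client-id.apps.googleusercontent.com",
                        "https://example.com/oauth/callback", access="read_only")
print(url)
```

After the user clicks Allow, Google redirects back with a one-time code that is exchanged for access and refresh tokens; in OIC that exchange happens when the connection is tested and saved.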

I now switch back to the OIC Connection definition and click Test and then Save.

Now to using it in an integration -

I have a REST interface with the following request and response -

Request:

```json
{"taskList": "myTaskList", "taskName": "task", "description": "desc", "dateDue": "2019-10-12T23:28:56.782Z"}
```

Response:

```json
{"taskListId": "ABC", "taskId": "123"}
```

Essentially, I will be creating a new tasklist and adding a task to it.

The response contains the IDs of the new task list and task.
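The two payloads can be modelled as a pair of typed records. A minimal Python sketch, assuming dateDue is always an RFC 3339 timestamp like the one in the sample; the class names are hypothetical and not part of OIC.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime


@dataclass
class CreateTaskRequest:
    taskList: str     # title for the new task list
    taskName: str     # title for the new task
    description: str  # free-text description of the task
    dateDue: str      # RFC 3339 timestamp, e.g. "2019-10-12T23:28:56.782Z"

    @classmethod
    def from_json(cls, text: str) -> "CreateTaskRequest":
        req = cls(**json.loads(text))  # unknown or missing keys raise TypeError
        # Reject a malformed dateDue early ("Z" rewritten for older Pythons)
        datetime.fromisoformat(req.dateDue.replace("Z", "+00:00"))
        return req


@dataclass
class CreateTaskResponse:
    taskListId: str  # id returned by Insert Task List
    taskId: str      # id returned by Insert Task

    def to_json(self) -> str:
        return json.dumps(asdict(self))


req = CreateTaskRequest.from_json(
    '{"taskList":"myTaskList", "taskName":"task", "description": "desc", '
    '"dateDue": "2019-10-12T23:28:56.782Z"}')
print(req.taskList)  # myTaskList
print(CreateTaskResponse(taskListId="ABC", taskId="123").to_json())
# {"taskListId": "ABC", "taskId": "123"}
```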

I drop the google Task connection into the integration -

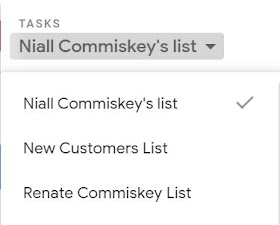

and select Insert Task List - this will add a new Tasklist to my existing list -

I then drop the google task adapter again and select Insert Task -

I will add the tasklist ID to the the Insert Task Request, to create the link between the two.
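The chaining of the two adapter calls can be sketched in Python as below. This is an illustration, not what OIC generates: `post` is a hypothetical stand-in for the adapter, and the URLs are assumed to be the public Google Tasks REST endpoints for tasklists.insert and tasks.insert.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical transport: POSTs a JSON body to a URL and returns the parsed JSON reply.
# In OIC the Google Tasks adapter plays this role; here it is just a function argument.
Post = Callable[[str, dict], dict]

BASE_URL = "https://tasks.googleapis.com/tasks/v1"


def create_list_and_task(req: Dict[str, str], post: Post) -> Dict[str, str]:
    # 1. Insert Task List: the reply contains the new list's id
    task_list = post(f"{BASE_URL}/users/@me/lists",
                     {"kind": "tasks#taskList", "title": req["taskList"]})
    list_id = task_list["id"]
    # 2. Insert Task: the list id in the URL links the task to the new list
    task = post(f"{BASE_URL}/lists/{list_id}/tasks",
                {"kind": "tasks#task", "title": req["taskName"]})
    # 3. Shape the reply like the REST interface's response
    return {"taskListId": list_id, "taskId": task["id"]}


# Usage with a fake transport that records calls and returns canned ids
calls: List[Tuple[str, dict]] = []


def fake_post(url: str, body: dict) -> dict:
    calls.append((url, body))
    return {"id": "ABC"} if url.endswith("/users/@me/lists") else {"id": "123"}


result = create_list_and_task({"taskList": "myTaskList", "taskName": "task"}, fake_post)
print(result)  # {'taskListId': 'ABC', 'taskId': '123'}
```

Passing the transport in as a function keeps the ordering logic (list first, then task using the list's id) testable without network access.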

Maybe this is a good time to look at the Google Tasks API docs.

So back to the integration -

Here is the mapping for createTaskList -

I set kind = "tasks#taskList"

The title is set to the request field taskList.

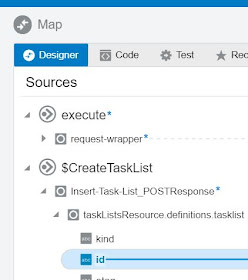

Here is the mapping for createTask -

I set kind = "tasks#task"

Note how I set the tasklistId under TemplateParameters.

It appears under TemplateParameters because the task list ID is a path parameter in the URL, which is as follows -

It is set to the ID returned by the createTaskList request.
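Expanding the template parameter and building the request body might look like the following Python sketch. Only kind = "tasks#task" comes from the mapping above; the title, notes and due assignments are assumptions about the rest of the mapping, and the URL template is assumed to be the public tasks.insert endpoint. Per the Google Tasks API reference, due records only the date, so the time part of dateDue is discarded.

```python
from urllib.parse import quote

# tasks.insert URL; {tasklist} is the path (template) parameter
TASKS_INSERT_TEMPLATE = "https://tasks.googleapis.com/tasks/v1/lists/{tasklist}/tasks"


def map_create_task(request: dict, tasklist_id: str) -> tuple:
    """Return (url, body) for createTask. The field mapping is an assumption."""
    url = TASKS_INSERT_TEMPLATE.format(tasklist=quote(tasklist_id, safe=""))
    body = {
        "kind": "tasks#task",
        "title": request["taskName"],     # assumed: taskName -> title
        "notes": request["description"],  # assumed: description -> notes
        "due": request["dateDue"],        # RFC 3339; the API keeps only the date part
    }
    return url, body


url, body = map_create_task(
    {"taskList": "myTaskList", "taskName": "Important Task",
     "description": "sehr wichtig!", "dateDue": "2019-10-12T23:28:56.782Z"},
    tasklist_id="ABC")
print(url)  # https://tasks.googleapis.com/tasks/v1/lists/ABC/tasks
```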

I activate and test with the following payload -

```json
{"taskList": "myTaskList", "taskName": "Important Task", "description": "sehr wichtig!", "dateDue": "2019-10-12T23:28:56.782Z"}
```

I check in Google Tasks -

Monday, September 9, 2019

#729 OIC AQ adapter

Queue Setup in Oracle Advanced Queuing

The first step was to set up the queue in AQ.

```sql
-- Object type used as the message payload
CREATE TYPE Message_typ AS OBJECT (
  subject VARCHAR2(30),
  text    VARCHAR2(80));
/

-- Queue table holding Message_typ payloads, then the queue itself
EXECUTE DBMS_AQADM.CREATE_QUEUE_TABLE (queue_table => 'objmsgs80_qtab', queue_payload_type => 'Message_typ');

EXECUTE DBMS_AQADM.CREATE_QUEUE (queue_name => 'msg_queue', queue_table => 'objmsgs80_qtab');

-- Enable enqueue and dequeue on the queue
EXECUTE DBMS_AQADM.START_QUEUE (queue_name => 'msg_queue');
```

I then created a procedure to enqueue a message -

```sql
CREATE OR REPLACE PROCEDURE P_AQ_ENQ AS
  enqueue_options    dbms_aq.enqueue_options_t;
  message_properties dbms_aq.message_properties_t;
  message_handle     RAW(16);
  message            Message_typ;
BEGIN
  -- Build the payload ('Gruess Gott von AQ' = "Greetings from AQ")
  message := Message_typ('NC MESSAGE', 'Gruess Gott von AQ');
  -- Put it on the queue; msgid returns the new message's ID
  dbms_aq.enqueue(queue_name         => 'msg_queue',
                  enqueue_options    => enqueue_options,
                  message_properties => message_properties,
                  payload            => message,
                  msgid              => message_handle);
  COMMIT;
END;
/
```

Create the Integration in OIC

A simple use case: dequeue the message and write it to a file.
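The dequeue-and-write flow can be sketched in plain Python, with a `collections.deque` standing in for msg_queue. This is only a model of what the integration does, not how the AQ adapter is implemented; the `Message` class mirrors Message_typ, and the one-line-per-message file format is hypothetical.

```python
import tempfile
from collections import deque
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Message:
    # Same shape as the Message_typ object type (subject VARCHAR2(30), text VARCHAR2(80))
    subject: str
    text: str


def drain_to_file(queue: "deque[Message]", out: Path) -> int:
    """Dequeue waiting messages in FIFO order and append one line per message."""
    written = 0
    with out.open("a", encoding="utf-8") as f:
        while queue:
            msg = queue.popleft()                   # dequeue removes the message
            f.write(f"{msg.subject}|{msg.text}\n")  # hypothetical line format
            written += 1
    return written


# In-memory stand-in for msg_queue holding the message P_AQ_ENQ enqueues
msg_queue = deque([Message("NC MESSAGE", "Gruess Gott von AQ")])
out_file = Path(tempfile.mkdtemp()) / "aq_messages.txt"
print(drain_to_file(msg_queue, out_file))  # 1
```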

The AQ adapter endpoint, getMsg, is configured as follows -

I set Tracking -

I now execute the PL/SQL procedure to enqueue a message -

I check my FTP directory -

I check out the Monitoring/Tracking screen -

Simple and succinct.

Wednesday, September 4, 2019

Tuesday, September 3, 2019

#727 OIC - Integrations leveraging Process for Error Handling Part 1

Here is a simple example of leveraging Process for human intervention in error handling.

I have an integration that uses the connectivity agent to write to an on-premise file.

The use case is simple: the JSON request contains customer details, and these are simply written to a file.

CreateCustomer is the action that invokes the File Adapter.

The Global Fault Handler is configured as follows -

As you can see, the fault handler is calling a process.

This process will display the Error and the Customer payload.

The use case here - the person to whom this task is assigned views the error, and, if possible, takes corrective action.

In my simple example, the connectivity agent is down. She restarts the agent and then resubmits the customer data to the integration.

Now to a test -

As you can see, the request to OIC has timed out.

Here is the Instance Tracking in OIC -

I login to Process Workspace and see the following task -

The error message is salient and to the point -

No response received within response time out window of 260 seconds. Agent may not be running, or temporarily facing connectivity issues to Oracle Integration Cloud Service.

I re-start the agent -

I then click Re-Submit -

Customer data is processed and the file is written -

OK, this may not be the most appropriate use case for the process user.

So how about one that is?

I have a DB table - Customers -

Working with the customer data from the previous example, we will insert a new record into the Customers table, mapping custnr to cust_id.

The integration has been amended as follows -

I added a scope for the DB Insert - I also added a call to our error handling process in the Scope Fault Handler -

I test with the following payload -

This returns an HTTP 200 OK.

I check in OIC Monitoring -

However, when I view this -

This is caught by the Scope Fault Handler -

Ergo, the process has been called.

I check Workspace -

Apologies for the error message in German, but such is life!

The name value is too large for the column.

Now I can fix this error and re-submit.

Now the issue is that two files have been written -

So let's change the integration somewhat and use only a Global Fault Handler, with no more scope.

I test with the following payload -

This time I get an HTTP 500 response in Postman.
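Why did the scope version answer 200 while this version answers 500? A minimal Python model, assuming the file write happens before the DB insert and using a hypothetical 10-character limit for the name column: a fault handled inside the scope lets the flow finish normally, whereas a fault that reaches the global handler ends the instance in error. All function names here are illustrative, not OIC APIs.

```python
from typing import Callable, Dict, List, Optional

Payload = Dict[str, str]
Handler = Callable[[Exception, Payload], None]

MAX_NAME_LEN = 10  # hypothetical width of the name column


def write_file(payload: Payload, files: List[str]) -> None:
    files.append(payload["custnr"])  # stands in for the File Adapter write


def insert_db(payload: Payload) -> None:
    # Stands in for the DB insert; mimics the "value too large for column" error
    if len(payload["name"]) > MAX_NAME_LEN:
        raise ValueError("value too large for column NAME")


def run(payload: Payload, files: List[str],
        scope_handler: Optional[Handler] = None,
        global_handler: Optional[Handler] = None) -> int:
    try:
        write_file(payload, files)       # assumed order: file first, then DB
        try:
            insert_db(payload)           # the DB insert sits inside a scope
        except Exception as exc:
            if scope_handler is None:
                raise                    # no scope handler: the fault escalates
            scope_handler(exc, payload)  # handled locally; the flow carries on
        return 200
    except Exception as exc:
        if global_handler is not None:
            global_handler(exc, payload)  # e.g. raise the human task
        return 500


raised: List[str] = []


def raise_task(exc: Exception, payload: Payload) -> None:
    raised.append(f"{payload['custnr']}: {exc}")


bad = {"custnr": "4711", "name": "A name that is far too long"}
print(run(bad, [], scope_handler=raise_task))   # 200
print(run(bad, [], global_handler=raise_task))  # 500
```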

The Process has been called - I fix the name length issue and re-submit -

Integration completes successfully -

File is written -

DB is updated -
